Let’s start with the easiest task: translating between two known languages. Computer systems already exist that can recognize written and even spoken words and translate them into other languages. While the accuracy of such systems is still limited, we should expect vast improvements by the time of the 22nd through 24th centuries. But what about a computer learning a new language through pattern recognition?

This is also something that has already started to happen. Franz Josef Och, a specialist in computer translation, has developed systems that use statistical models to let computers learn new languages. The computer is given a document that exists in two languages: an original and its translation. It compares the two versions and identifies corresponding patterns. Words that show up the same number of times and in roughly the same part of the document are matched up. Nearby words are then compared, and the process continues. The computer can then be given new text in one of the languages and translate it into the other.

The accuracy of this method depends on the quantity and quality of the text the computer learns from. For example, researchers use the text of the Bible because it is long and has been translated into many languages.
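To make the idea of "learning" translations from a parallel document a little more concrete, here is a minimal sketch of IBM Model 1, a classic textbook model for statistical word alignment. This is a swapped-in illustration of the general technique, not Och's actual system, and the function names (`train_ibm_model1`, `best_translation`) and the three-sentence German/English corpus are hypothetical. The model starts with no idea which words correspond and repeatedly re-estimates translation probabilities from how often words co-occur in matching sentences (the expectation-maximization, or EM, algorithm).

```python
from collections import defaultdict


def train_ibm_model1(pairs, iterations=10):
    """Estimate t[(tgt, src)] = P(tgt word | src word) from sentence pairs using EM.

    Hypothetical helper for illustration; real systems add alignment
    models, phrase tables, and far larger corpora.
    """
    tgt_vocab = {w for _, tgt in pairs for w in tgt}
    # Start from uniform translation probabilities: no pairing is preferred yet.
    t = defaultdict(lambda: 1.0 / len(tgt_vocab))
    for _ in range(iterations):
        count = defaultdict(float)  # expected co-occurrence counts c(tgt, src)
        total = defaultdict(float)  # expected counts c(src)
        # E-step: share each target word among the source words of its sentence,
        # in proportion to the current translation probabilities.
        for src, tgt in pairs:
            for e in tgt:
                norm = sum(t[(e, f)] for f in src)
                for f in src:
                    share = t[(e, f)] / norm
                    count[(e, f)] += share
                    total[f] += share
        # M-step: renormalize the expected counts into probabilities.
        t = defaultdict(float, {(e, f): c / total[f] for (e, f), c in count.items()})
    return t


def best_translation(t, src_word):
    """Return the target word with the highest P(tgt | src_word)."""
    candidates = {e: p for (e, f), p in t.items() if f == src_word}
    return max(candidates, key=candidates.get)


# Hypothetical toy "parallel document": the same three sentences in German and English.
pairs = [
    ("das haus".split(), "the house".split()),
    ("das buch".split(), "the book".split()),
    ("ein buch".split(), "a book".split()),
]
t = train_ibm_model1(pairs)
for word in ["das", "haus", "buch", "ein"]:
    print(word, "->", best_translation(t, word))
# prints: das -> the, haus -> house, buch -> book, ein -> a (one per line)
```

Nothing in the code knows any German; "das" is paired with "the" only because they keep appearing in the same sentence pairs. That is also why the method needs an existing translation: with only one side of `pairs`, there would be nothing to align.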

As impressive as this method is, it still depends on the existence of a previously translated work: a Rosetta Stone of some kind. But how do you boldly translate what no one has translated before?

This is where things get more difficult. Earth languages are remarkably diverse. While American language students struggle with conjugation and grammatical gender in a Spanish class, they likely have no concept of the declension of nouns and adjectives in Russian, or of more “exotic” agglutinative languages that can pack what English needs an entire sentence to say into a single word. Navajo differs from English so greatly that it was used during WWII as an “unbreakable code.” And we haven’t even left Earth yet. (For a nice survey of linguistic concepts, read Chapter 7, “Alien Language,” in the book Aliens and Alien Societies, by Stanley Schmidt.)

A computer might identify that an alien said queseppy seventeen times in a conversation. But recognizing that pattern doesn’t get us very far. We don’t know if it’s a noun, a verb, an adjective, or a proper name. Its placement in the sentence won’t help, since there are no universal rules for where different parts of speech appear in a sentence, nor any guarantee that a given language even has the same parts of speech as another.

So in the absence of an existing translation, you can’t rely on statistical analysis alone. You need some other point of reference. And that’s exactly what happens when people learn a previously unknown language.

If two people were stranded together and had no language in common, they might eventually communicate with each other. How? By seeing how what a person says relates to his surroundings, actions, and interactions. Which isn’t to say it would be easy.

In “The Ensigns of Command” (TNG), Counselor Troi uses this kind of scenario to illustrate the difficulty of establishing meaningful communication with aliens. She tests Picard by saying a single word and letting him guess what it means, and he struggles to do it. The point is somewhat exaggerated, but true. Even with environmental cues, such as pointing to an object, a single word, phrase, or even sentence simply cannot be understood out of context. Meaningful, accurate communication requires extensive exposure and shared experiences.

Any translation device would have to be able to process such information. Schmidt explains it this way: “Furthermore, merely analyzing the structure of a language is not enough—you also have to know how it relates to its subject matter. So science-fictional translators that learn a language simply by learning a sample of it are unconvincing. Such a machine would also have to analyze the speakers, and their surroundings and activities for quite a while. Given the opportunity and ability to do that, a highly sophisticated artificial intelligence might be able to do it.”

We rarely see the UT being given the opportunity for this kind of analysis. One notable exception is the DS9 episode “Sanctuary.” The Skrreeans have to speak in the presence of the computer for quite some time before it can identify their unique speech pattern. We can assume this has happened in other instances that we simply have not witnessed. But on many occasions we are introduced to an alien race for the very first time and this step is glossed over: the UT simply works flawlessly from the very beginning.

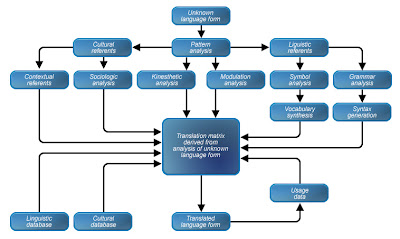

The TNG Technical Manual essentially acknowledges all of these issues in its explanation of how the UT works. The diagram above, based on the one in the book, shows how it is all supposed to work. Admittedly this is a non-canon source, but it does not seem to contradict anything shown on screen and is reasonably consistent with what we know of translation technology. However, the diagram neglects to include something that has been mentioned on screen: scanning brain activity. This aspect of the UT will be considered next.
